As of December 2, 2015, the new Azure Portal is generally available. As Leon Welicki mentioned in the GA announcement, the release was driven by quality bars for usability, performance and reliability.

Today I'd like to talk about what it takes to make a large single-page application like the Azure Portal meet these high bars for scale, performance and reliability.

The challenges

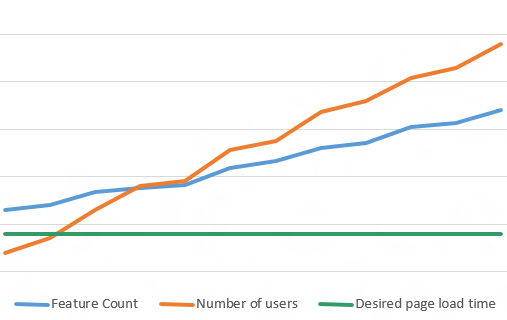

We've been adding features to the Azure Portal at a tremendous rate as we moved towards GA. As a result, there was a continuous inflow of new code executing in the client's browser, and when that happens, it is easy to regress performance and reliability. Keeping them in check required constant vigilance and regression control.

The Azure Portal consists of millions of lines of JavaScript written by hundreds of software engineers across several teams in Microsoft, building experiences for various Azure services. Ensuring that all this code plays well together is a challenge in itself.

The Microsoft cloud is growing at a tremendous rate, with new services being offered all the time. That's awesome, but it also means the challenge keeps getting bigger: as the scale increases, we still need to maintain the same levels of performance and reliability.

Having a strong foundation

Having a good system in place, so that an engineering team can work without friction, is essential to building such a large-scale product. I'm reminded of Joel Spolsky's "The Joel Test: 12 Steps to Better Code". While a tad outdated, a lot of it still applies. I'd like to elaborate on some of these steps:

- Using source control - while this is important (and assumed), what's more important is having a simple, well-understood branching strategy.

- Single step build creation - I'll go a step further and say that it should also be simple to iterate on changes and test them. When you're writing a new feature, you shouldn't have to build the entire product; you should be able to recompile just the component you're adding or modifying and test it.

- Daily builds - better yet, a build on every change. In today's world, it is crucial to be agile and to ship quickly with confidence in quality. Without automated builds on every check-in, this would be extremely difficult.

- Automated testing - in addition to building on every check-in, your functionality should be verified every time the code changes. In a large team, manual testing is not something you can rely on.

- Add tests along with features in the same check-in - this is another crucial aspect of maintaining quality. It's the best way to ensure that functionality doesn't regress.

- Have a good set of coding guidelines - geared towards readability, testability and performance. Note that I've put performance third. That is because I feel you should optimize for performance once you've identified bottlenecks; otherwise, optimize for highly readable code. After all, somebody may have to go in there and fix a bug in your code, so be considerate.

- Tracking bugs - and prioritizing which ones to fix first.

Measure, Measure, Measure

Trying to improve performance by modifying code without measuring first is a fool's errand. You may spend a lot of time optimizing a small portion of an operation, only to realize that it never had a big impact on the overall performance of the feature in the first place. Measuring and identifying bottlenecks is the best way to figure out where to invest.

There are two aspects to this:

- **Identify the scenarios to improve:** Identify the experiences that are important to the product. While you might hope to get to everything, that often won't be possible. Analyze how users use the product, what they care about, and how performant and reliable those scenarios are.

- **Segregate into atomic operations:** For every scenario or experience that you target, you should be able to measure each of its constituent operations and figure out where the bottlenecks are. Some operations may have a large impact on the performance of the experience; others may not.
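To make the second point concrete, here is a minimal TypeScript sketch of timing each atomic operation in a scenario and ranking the operations by cost. `ScenarioTimer`, `measure` and `breakdown` are hypothetical names, not part of any Azure Portal API, and the sketch only handles synchronous operations; an async variant would await the operation before reading the clock again.

```typescript
// Minimal sketch: time each atomic operation within one scenario and rank the results.
// ScenarioTimer, measure() and breakdown() are hypothetical names, not a portal API.
type Clock = () => number;

interface OperationCost {
  operation: string;
  ms: number;    // total time spent in this operation
  share: number; // fraction of the scenario's measured time (0..1)
}

class ScenarioTimer {
  private readonly totals = new Map<string, number>();

  // Date.now() keeps the sketch runtime-agnostic; in a browser,
  // () => performance.now() gives sub-millisecond resolution.
  constructor(private readonly clock: Clock = () => Date.now()) {}

  // Runs one synchronous operation and adds its elapsed time to that operation's total.
  measure<T>(operation: string, fn: () => T): T {
    const start = this.clock();
    try {
      return fn();
    } finally {
      const elapsed = this.clock() - start;
      this.totals.set(operation, (this.totals.get(operation) ?? 0) + elapsed);
    }
  }

  // Operations ordered from most to least expensive: the top rows are the bottlenecks.
  breakdown(): OperationCost[] {
    let total = 0;
    this.totals.forEach((ms) => {
      total += ms;
    });
    const rows: OperationCost[] = [];
    this.totals.forEach((ms, operation) => {
      rows.push({ operation, ms, share: total > 0 ? ms / total : 0 });
    });
    return rows.sort((a, b) => b.ms - a.ms);
  }
}
```

Wrapping, say, a network call and a render step in `measure` across many runs of the same scenario, the top rows of `breakdown()` show which operations are worth optimizing first.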

Set targets

This goes hand-in-hand with measurement. You need to figure out how far you want to go; in fact, you may even choose to set targets before you start measuring. Once you've set targets, your initial measurements give you a baseline, and the difference between the baseline and the target is your gap analysis. This is very convenient, as you can now track your progress as you check in your optimizations.
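The gap analysis itself is simple arithmetic once a baseline and a target exist. A minimal sketch, assuming a lower-is-better metric such as load time in milliseconds; `MetricGoal` and `gapReport` are hypothetical names:

```typescript
// Minimal sketch of a gap analysis for a lower-is-better metric (e.g. load time in ms).
// MetricGoal and gapReport are hypothetical names used for illustration.
interface MetricGoal {
  baseline: number; // first measurement, taken before any optimization
  target: number;   // the goal you set
}

interface GapReport {
  remaining: number; // how far the current value still is from the target (0 once met)
  progress: number;  // fraction of the baseline-to-target gap closed so far, in [0, 1]
}

function gapReport(goal: MetricGoal, current: number): GapReport {
  const remaining = Math.max(0, current - goal.target);
  const gap = goal.baseline - goal.target;
  if (gap <= 0) {
    // The baseline already met the target, so progress is simply met (1) or not met (0).
    return { remaining, progress: remaining === 0 ? 1 : 0 };
  }
  const closed = (goal.baseline - current) / gap;
  return { remaining, progress: Math.min(1, Math.max(0, closed)) };
}
```

With hypothetical numbers, a baseline of 8000 ms, a target of 4000 ms and a current measurement of 6000 ms report 2000 ms remaining and half of the gap closed. Recomputing this after each check-in is what turns the baseline into a progress tracker.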

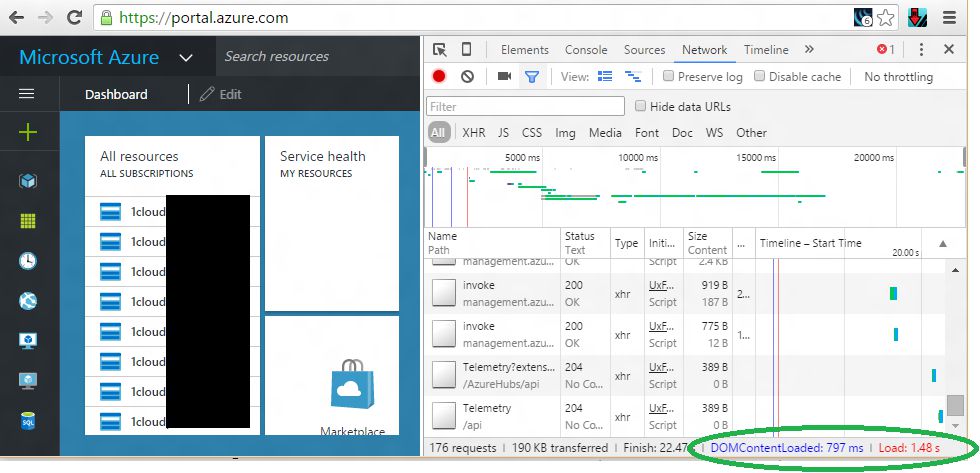

Let's take a look at two areas we've worked on recently: page load time and ensuring fast, reliable experiences.

Page load time (PLT)

The Azure Portal is a single-page application, and a really large one at that. It consists of millions of lines of code that let you create, manage and monitor a wide variety of Azure services, from Web Apps to SQL Servers to Virtual Machines. Ensuring a really fast page load time is both a necessity and a big challenge.

We targeted it from two points of view:

- the median - to understand what a typical user experiences

- the 95th percentile - to understand what the worst experiences look like after excluding the slowest 5% of page loads as outliers

We set goals for both of these and started identifying potential solutions to get us there.
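Both numbers come from the same set of page-load samples. The sketch below uses the nearest-rank definition of a percentile, which is one of several common definitions; `percentile` is a hypothetical helper, not an API the portal exposes.

```typescript
// Nearest-rank percentile: the smallest sample such that at least p% of all
// samples are less than or equal to it. `percentile` is a hypothetical helper.
function percentile(samples: readonly number[], p: number): number {
  if (samples.length === 0) {
    throw new Error("percentile of an empty sample set is undefined");
  }
  if (!(p > 0 && p <= 100)) {
    throw new Error("p must be in (0, 100]");
  }
  // Sort a copy numerically; the default sort would compare values as strings.
  const sorted = samples.slice().sort((a, b) => a - b);
  // 1-based rank, computed as (p * n) / 100 so integer inputs avoid fractional rounding surprises.
  const rank = Math.ceil((p * sorted.length) / 100);
  return sorted[rank - 1];
}
```

Fed with a set of page-load durations, `percentile(loads, 50)` gives the median and `percentile(loads, 95)` the 95th percentile. With nearest-rank, the median of an even-sized sample is the lower of the two middle values rather than their average; for large telemetry samples the difference is usually small.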

We started by targeting the usual suspects: the size of the client-side code downloaded when the page is loaded in a browser, and the amount of network traffic on startup (both the number of network calls and the amount of data transferred in those calls).
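One way to see both of these numbers in a browser is the Resource Timing API, whose entries expose an `initiatorType` and a `transferSize` in bytes. The sketch below is a hypothetical helper that aggregates such entries; it declares its own minimal `ResourceEntry` shape so it also runs outside a browser.

```typescript
// Minimal shape of a Resource Timing entry; browser PerformanceResourceTiming
// objects have these fields (and many more).
interface ResourceEntry {
  initiatorType: string; // e.g. "script", "link", "fetch", "xmlhttprequest"
  transferSize: number;  // bytes fetched over the network, including headers
}

interface TrafficSummary {
  requestCount: number;
  totalBytes: number;
  byInitiator: { [initiatorType: string]: { count: number; bytes: number } };
}

// Hypothetical helper: aggregates request count and bytes, overall and per initiator type.
function summarizeTraffic(entries: readonly ResourceEntry[]): TrafficSummary {
  const summary: TrafficSummary = { requestCount: 0, totalBytes: 0, byInitiator: {} };
  for (const entry of entries) {
    summary.requestCount += 1;
    summary.totalBytes += entry.transferSize;
    let bucket = summary.byInitiator[entry.initiatorType];
    if (bucket === undefined) {
      bucket = { count: 0, bytes: 0 };
      summary.byInitiator[entry.initiatorType] = bucket;
    }
    bucket.count += 1;
    bucket.bytes += entry.transferSize;
  }
  return summary;
}
```

In a browser you could call it as `summarizeTraffic(performance.getEntriesByType("resource") as PerformanceResourceTiming[])` right after startup. Note that `transferSize` is 0 for responses served from the local cache and for cross-origin resources that don't send a `Timing-Allow-Origin` header, so byte totals can undercount.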

Further, we identified which components and services needed to be initialized on startup and which of them could be deferred. This let us make the portal interactive for the user as quickly as possible.
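A minimal sketch of splitting startup work into critical and deferred initializers. `StartupScheduler` and the component names in the test are hypothetical; the post doesn't describe how the portal's own startup sequencing is implemented.

```typescript
// Minimal sketch: split startup work into critical and deferred initializers.
// StartupScheduler is a hypothetical name, not part of the Azure Portal.
type Initializer = () => void;

interface StartupTask {
  name: string;
  init: Initializer;
}

class StartupScheduler {
  private readonly critical: StartupTask[] = [];
  private readonly deferred: StartupTask[] = [];

  // Only critical tasks run before the page becomes interactive.
  register(name: string, init: Initializer, isCritical: boolean): void {
    (isCritical ? this.critical : this.deferred).push({ name, init });
  }

  // Runs the work the first interactive view depends on; returns task names in run order.
  runCritical(): string[] {
    return StartupScheduler.drain(this.critical);
  }

  // Runs everything else. Call it once the UI is interactive, for example from a
  // requestIdleCallback callback in a browser.
  runDeferred(): string[] {
    return StartupScheduler.drain(this.deferred);
  }

  // Runs queued tasks in registration order and empties the queue.
  private static drain(queue: StartupTask[]): string[] {
    const ran: string[] = [];
    for (const task of queue.splice(0, queue.length)) {
      task.init();
      ran.push(task.name);
    }
    return ran;
  }
}
```

Deferred work still runs, just after the user can interact with the page, so the cost moves out of the critical path instead of disappearing.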

By making these changes, we have been able to bring the median load time down to a few seconds.

Here's a screenshot of how long it took me to load the portal on my broadband connection today.

Ensuring fast and reliable experiences

Every experience in the portal, such as Web Apps, Virtual Machines, Cloud Services and SQL Database, is built as an extension to the Azure Portal. This lets the portal lazy-load experiences only when they are needed, and also provides other benefits such as code isolation and a flexible release cadence for each experience.
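The lazy-loading half of this design can be sketched as a registry that maps extension names to loader functions and only invokes a loader the first time its extension is requested. `ExtensionRegistry` and its methods are hypothetical; in a real application a loader would typically wrap a dynamic `import()`. The sketch does not model the code isolation mentioned above.

```typescript
// Minimal sketch of on-demand extension loading. ExtensionRegistry is hypothetical.
// A loader fetches an extension's code, e.g. () => import("./someExtension").
type ExtensionLoader<T> = () => Promise<T>;

class ExtensionRegistry<T> {
  private readonly loaders = new Map<string, ExtensionLoader<T>>();
  private readonly requested = new Map<string, Promise<T>>();

  // Registering is cheap: nothing is downloaded until the extension is first used.
  register(name: string, loader: ExtensionLoader<T>): void {
    this.loaders.set(name, loader);
  }

  // Loads an extension on first use and caches the promise, so concurrent callers
  // share a single load. A failed load is evicted so a later call can retry it.
  get(name: string): Promise<T> {
    const cached = this.requested.get(name);
    if (cached) return cached;
    const loader = this.loaders.get(name);
    if (!loader) return Promise.reject(new Error(`Unknown extension: ${name}`));
    const pending = loader().catch((err: unknown) => {
      this.requested.delete(name);
      throw err;
    });
    this.requested.set(name, pending);
    return pending;
  }

  // True once the extension has been requested (its load may still be in flight).
  isRequested(name: string): boolean {
    return this.requested.has(name);
  }
}
```

Caching the promise, rather than the loaded module, means two parts of the UI that ask for the same extension at the same time trigger only one download.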

The extension model also brings with it an interesting challenge: how do you ensure that each experience works seamlessly and reliably in the portal? This was one of our key focuses as we made our way to general availability. Our reliability bar of 99.9% is high, and we've achieved it, but the job's not over yet.

Once you reach this level of reliability, you need to work hard to ensure it stays there. At a granular level, that requires telemetry in all the right places to detect errors, capture as much useful information about them as possible and, most importantly, detect regressions, so that you can address them quickly.
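Detecting regressions starts with counting outcomes per operation. The sketch below is a minimal, hypothetical `ReliabilityTracker` that telemetry hooks would feed; it flags operations whose success rate falls below a fixed bar, such as the 99.9% mentioned above (`target = 0.999`), and ignores operations with too few samples to judge. The operation names in the test are made up.

```typescript
// Minimal sketch: count outcomes per operation and flag any whose success rate
// falls below a reliability target. ReliabilityTracker is a hypothetical name.
interface OperationHealth {
  operation: string;
  total: number;
  successRate: number;
}

class ReliabilityTracker {
  private readonly counts = new Map<string, { ok: number; failed: number }>();

  // Called from telemetry hooks each time an operation completes or fails.
  record(operation: string, succeeded: boolean): void {
    const c = this.counts.get(operation) ?? { ok: 0, failed: 0 };
    if (succeeded) {
      c.ok += 1;
    } else {
      c.failed += 1;
    }
    this.counts.set(operation, c);
  }

  // Operations below the target success rate, skipping those with fewer than
  // minSamples outcomes. Worst offenders come first.
  belowTarget(target: number, minSamples: number): OperationHealth[] {
    const flagged: OperationHealth[] = [];
    this.counts.forEach((c, operation) => {
      const total = c.ok + c.failed; // always >= 1, since record() added at least one outcome
      const successRate = c.ok / total;
      if (total >= minSamples && successRate < target) {
        flagged.push({ operation, total, successRate });
      }
    });
    return flagged.sort((a, b) => a.successRate - b.successRate);
  }
}
```

A fixed threshold is the simplest possible check; comparing each operation against its own recent history would catch smaller regressions, at the cost of more bookkeeping.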

What's next

A lot of effort has been put in by the entire Azure Portal team to make the portal a fast, fluid and reliable experience for users. While this is a great start, there's always room for more improvement.

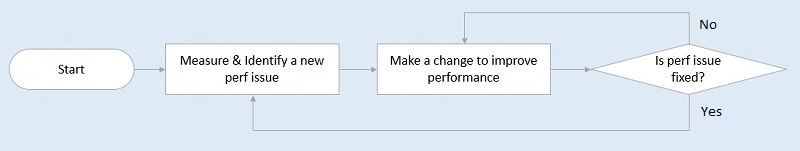

Here's a crude flowchart giving an oversimplified view of how to improve performance.

Stay tuned for more improvements to the Azure Portal.